Hey Google, what can you do? However simple your question is, there is a complex process running discreetly in the background to bring you the best answer. But what decides the best answer of all answers? Another simple answer: machine learning. My team is using it to solve our clients’ most complex business problems. Using SAP Data Intelligence (DI) and SAP’s Business Technology Platform, we can orchestrate data and develop complex machine learning pipelines that integrate seamlessly with SAP Enterprise Resource Planning (ERP) systems. This article will explore how we used the popular search ranking algorithm Term Frequency – Inverse Document Frequency (TF-IDF) in SAP DI to build artificially intelligent material and customer search functionality that makes finding your data easy and simple.

One of the primary concerns of a wholesaler is the ability to sell efficiently. Wholesalers handle orders with thousands of line items and manage thousands of materials and customers within their ERP systems. Complex orders require a Customer Sales Representative to search through massive amounts of customer and/or material data within their system. This can prove to be a tedious process when little identifying information is known, or a poor description is provided. Additionally, customers often label materials differently than the vendors’ internal system. Among hundreds of similar materials, how do you find exactly what your client needs? In the fall of 2020, a client approached our team with the challenge of building an Artificially Intelligent material search tool. Like a Google Search, but for their internal material (aka MARA) SAP database.

The client’s internal team had attempted to retrieve materials using a hierarchical subject classification scheme in which a material would be identified by sorting through multiple classifications. Like a dichotomous key, a material, like an exotic frog, could be identified according to its unique characteristics. However, due to their expansive set of material data and the poor descriptions provided by clients, they struggled to establish a classification system robust enough to capture the essential characteristics. Additionally, the model struggled with descriptions in which the descriptive words interfered with recognizing the object itself. For example:

½” x 4 SMLS NIP Brass Pipe Ring, ½” 2000# Valve (TL Series) ½” SS Barstock Hex Plug

The above example description would have been misinterpreted by the funnel model by identifying Pipe Ring as the primary object. Following the logic of the funnel system, the model would drill down into the Ring classification to further match characteristics. However, this material description is for a Hex Plug which would belong to an entirely different classification of Plug materials. This method meant that the suggested materials were from an entirely different division and far from the legitimate material.

To address these issues, our team used Term Frequency – Inverse Document Frequency (TF-IDF), available in the scikit-learn library (for example through its TfidfVectorizer class), to tokenize and evaluate individual words. Using the parameter token_pattern = r'\S+', we split text on whitespace, so any run of one or more non-whitespace characters (letters, digits, or punctuation) becomes a token. We then used the ngram_range=(1,2) parameter to accept both unigrams and bigrams, meaning tokens that consist of one or two words. These parameters greatly improved our ability to specify exactly what does and does not constitute a token. Note that, unlike scikit-learn's default pattern, which ignores single-character tokens, r'\S+' keeps them, so sizes such as 4 survive; filler tokens like “a” and “’s”, which create noise and decrease performance, have to be handled separately or left to the low weight TF-IDF assigns to very common terms. Additionally, tokens with three or more words are so specific that they decrease performance and defeat the purpose of tokenization. If a material description of 8 words is split into 4-word tokens (quadgrams), it is not very likely that those quadgrams have identical matches in the internal system. If you split the same description into its 7 bigrams, it is much more probable that a direct match will be made. The following example shows the value of setting ngram_range to (1, 2).

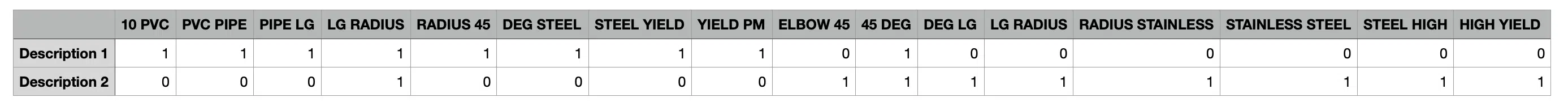

Customer’s Descriptions:

Description 1: “10 PVC PIPE LG RADIUS 45 DEG STEEL YIELD PM”

Description 2: “ELBOW 45 DEG LG RADIUS STAINLESS STEEL HIGH YIELD PM”

Bigram tokens:

From Description 1: 10 PVC | PVC PIPE | PIPE LG | LG RADIUS | RADIUS 45 | 45 DEG | DEG STEEL | STEEL YIELD | YIELD PM

From Description 2: ELBOW 45 | 45 DEG | DEG LG | LG RADIUS | RADIUS STAINLESS | STAINLESS STEEL | STEEL HIGH | HIGH YIELD | YIELD PM

Using the parameters of TF-IDF to tokenize material descriptions provides a significant advantage in performance through breaking text into bite-sized chunks. These chunks are much more likely to be effectively matched than entire descriptions. By breaking apart and reforming material descriptions, the search can yield much stronger results than the attempted funnel search approach.
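The tokenization described above can be sketched with the Python standard library alone. This is a minimal illustration of what token_pattern=r'\S+' and an n-gram range do, not scikit-learn's implementation: the `tokenize` helper is a hypothetical name, and unlike scikit-learn's default behavior it does not lowercase the text. The descriptions are the two customer examples, with YIELD spelled consistently.

```python
import re

def tokenize(text, ngram_range=(1, 2), token_pattern=r"\S+"):
    """Split text into whitespace-delimited words, then build every
    n-gram whose length falls inside ngram_range (inclusive)."""
    words = re.findall(token_pattern, text)
    shortest, longest = ngram_range
    ngrams = []
    for n in range(shortest, longest + 1):
        for start in range(len(words) - n + 1):
            ngrams.append(" ".join(words[start:start + n]))
    return ngrams

desc_1 = "10 PVC PIPE LG RADIUS 45 DEG STEEL YIELD PM"
desc_2 = "ELBOW 45 DEG LG RADIUS STAINLESS STEEL HIGH YIELD PM"

# Bigrams only, to mirror the token list shown in the example.
bigrams_1 = tokenize(desc_1, ngram_range=(2, 2))
bigrams_2 = tokenize(desc_2, ngram_range=(2, 2))

# Bigrams that both descriptions have in common.
shared = sorted(set(bigrams_1) & set(bigrams_2))
print(shared)
```

Even though the two descriptions are worded differently, they still share several bigrams, which is what gives the search something to match on.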

Once the description has been tokenized, we are able to vectorize the results as a matrix. Each column represents a token and each row represents a material description. If a token is present in a material description, the matrix holds a 1 at that row and column. If it is not, the value is 0. From the above example the matrix would look like this:

[Image: ML Demo, the token matrix for the example descriptions]

Once our descriptions are tokenized and their frequencies are represented by 1s and 0s, we can apply algorithms to measure and prioritize tokens based on their frequency in the descriptions.
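As a rough sketch of the 1-and-0 matrix for the two customer descriptions, the snippet below builds it with plain Python. The `bigrams` helper and variable names are hypothetical, only bigrams are used to keep the output short, and the matrix is printed with one token per line (transposed) so that it fits on screen.

```python
import re

def bigrams(text):
    """Return the two-word tokens of a whitespace-delimited description."""
    words = re.findall(r"\S+", text)
    return [" ".join(words[i:i + 2]) for i in range(len(words) - 1)]

descriptions = [
    "10 PVC PIPE LG RADIUS 45 DEG STEEL YIELD PM",
    "ELBOW 45 DEG LG RADIUS STAINLESS STEEL HIGH YIELD PM",
]

# One column per distinct token found anywhere in the descriptions.
vocabulary = sorted({tok for d in descriptions for tok in bigrams(d)})

# One row per description: 1 if the token occurs in it, else 0.
matrix = []
for d in descriptions:
    present = set(bigrams(d))
    matrix.append([1 if tok in present else 0 for tok in vocabulary])

# Print transposed: token, then its value for Description 1 and 2.
for tok, column in zip(vocabulary, zip(*matrix)):
    print(f"{tok:<20}{column[0]} {column[1]}")
```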

The algorithm measures importance by comparing a word’s frequency within a description inversely to its frequency within the dataset. If a word appears multiple times within a single material description, the algorithm will consider it more important. Words that appear less often in a single description are identified as less important. The frequency of a word within a description is called Term Frequency. Let’s look at another example:

VALVE, 3/4″ BALL FLOATING THREADED 3600 PSI FULL PORT SS BODY GRAPHITE SEALS DELRIN SEATS FIRE SAFE SS LATCH LOCK LEVER OPER SERIES S3-SS QUADRANT VALVE S3FSSAFSLTTO75

The above material description contains the word valve twice. Its frequency in that description is 2, higher than that of nearly every other word (only SS also appears twice as a standalone word), so the algorithm will consider valve more important within this description. Again, 2 is the term frequency of the word valve.
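Counting term frequency for this description takes only a few lines. The sketch below is an illustration, not the production pipeline: it splits on whitespace and strips trailing commas, which is an assumption made here so that "VALVE," and "VALVE" count as the same word.

```python
import re
from collections import Counter

description = (
    "VALVE, 3/4″ BALL FLOATING THREADED 3600 PSI FULL PORT SS BODY "
    "GRAPHITE SEALS DELRIN SEATS FIRE SAFE SS LATCH LOCK LEVER OPER "
    "SERIES S3-SS QUADRANT VALVE S3FSSAFSLTTO75"
)

# Whitespace tokenization; commas are stripped (assumption) so that
# "VALVE," and "VALVE" are treated as one term.
tokens = [tok.strip(",") for tok in re.findall(r"\S+", description)]

# Term frequency: how many times each token occurs in this description.
term_frequency = Counter(tokens)

repeated = {term: n for term, n in term_frequency.items() if n > 1}
print(repeated)
```

Without the comma stripping, a pure r'\S+' split would treat "VALVE," and "VALVE" as two different tokens, each with a term frequency of 1.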

TF-IDF also considers how widely a word is used across the corpus. The corpus is the entire dataset of material descriptions, and the number of descriptions that contain a given word is called its Document Frequency. If a word is common across the corpus, the algorithm will decide it is less important. In NLP (natural language processing) this is extremely valuable for identifying words like “a” and “the” which occur frequently but provide no context. Likewise, if the word is highly specific and scarce in the corpus, the algorithm will identify it as more important. Back to our prior example, if this is the only description in the entire dataset that includes the word valve, it will be considered very important.
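Document frequency can be sketched the same way. The four-description corpus below is invented purely for illustration; a real corpus would be the full set of material descriptions.

```python
from collections import Counter

# Hypothetical mini-corpus (not real client data).
corpus = [
    "BALL VALVE SS",
    "GATE VALVE BRASS",
    "HEX PLUG SS",
    "CHECK VALVE PVC",
]

# Document frequency: number of descriptions that contain each term.
# set() ensures a term is counted once per description.
document_frequency = Counter(
    term for description in corpus for term in set(description.split())
)

for term, count in sorted(document_frequency.items()):
    print(f"{term:<6} appears in {count} of {len(corpus)} descriptions")
```

In this toy corpus VALVE is common and therefore weak evidence, while PLUG appears in a single description and is a much stronger signal.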

You may remember, the I in TF-IDF stands for the inverse. This means the algorithm compares the term frequency to the inverse of the document frequency. If a word’s term frequency is low and its document frequency is high, it is not important. If a word’s term frequency is high and its document frequency is low, it is very important. Still with me? Here is the same concept written as an equation:

$$\frac{\text{Term Frequency}}{\text{Document Frequency}}$$
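The ratio above conveys the intuition. Library implementations typically log-scale the inverse document frequency rather than dividing directly. As a minimal sketch, assuming scikit-learn's documented default smoothing, idf(t) = ln((1 + n) / (1 + df(t))) + 1, and skipping the row normalization scikit-learn applies afterwards, the weighting can be reproduced with the standard library. The corpus and the function names are invented for illustration.

```python
import math
from collections import Counter

# Hypothetical mini-corpus (not real client data).
corpus = [
    "VALVE BALL SS VALVE",
    "GATE VALVE BRASS",
    "HEX PLUG SS",
    "CHECK VALVE PVC",
]
docs = [description.split() for description in corpus]
n_docs = len(docs)

# Document frequency: number of descriptions containing each term.
df = Counter(term for doc in docs for term in set(doc))

def idf(term):
    """Smoothed inverse document frequency (scikit-learn's default form)."""
    return math.log((1 + n_docs) / (1 + df[term])) + 1

def tfidf(doc):
    """Raw term count times smoothed IDF; no length normalization."""
    tf = Counter(doc)
    return {term: count * idf(term) for term, count in tf.items()}

for term, score in sorted(tfidf(docs[2]).items(), key=lambda kv: -kv[1]):
    print(f"{term:<5} {score:.3f}")
```

In the third description, PLUG and HEX outrank SS because SS also appears elsewhere in the corpus. In the first description, VALVE still scores highest despite being common, because it occurs twice there.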

As you can see, TF-IDF plays a huge role in natural language processing. The ability to calculate the importance of a word relies heavily on measuring its significance in context and in overall occurrence. Using TF-IDF in SAP DI creates metrics that can be used to further analyze material descriptions allowing us to efficiently match material and customer data across ERP systems. These capabilities have allowed us to improve our search result suggestions, improve type-ahead functionality, and have been the foundation of additional AI development such as automating purchase order entries using Optical Character Recognition (OCR) tools.
